NeuralHermes-2.5-Mistral-7B

NeuralHermes-2.5-Mistral-7B: a DPO-fine-tuned model built on the 7B-parameter Mistral architecture for advanced natural language processing.

Developer Portal: https://api.market/store/bridgeml/mlabonne

NeuralHermes 2.5 - Mistral 7B

NeuralHermes is based on the teknium/OpenHermes-2.5-Mistral-7B model, further fine-tuned with Direct Preference Optimization (DPO) on the mlabonne/chatml_dpo_pairs dataset. It surpasses the original model on most benchmarks (see Results).
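DPO does not train a separate reward model: it optimizes the policy directly on (prompt, chosen, rejected) triples, raising the likelihood of the chosen answer relative to a frozen reference model. A minimal sketch of the standard sigmoid DPO loss for one pair follows. The function name is hypothetical, it is not the TRL implementation, and the default `beta=0.1` is taken from the DPOTrainer settings listed under Training hyperparameters.

```python
import math

def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Sigmoid DPO loss for a single preference pair (illustrative sketch).

    Each argument is the summed log-probability of a full response
    (chosen or rejected) under the trained policy or the frozen reference.
    """
    # How much more (in log space) the policy favors each response than the reference does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    # beta scales the margin; smaller beta keeps the policy closer to the reference.
    logits = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(x)) == softplus(-x), computed in a numerically stable way.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))
```

When the policy still equals the reference, the loss is log 2 (about 0.693); `dpo_loss(-8.0, -12.0, -10.0, -10.0)`, where the policy already prefers the chosen answer, gives about 0.513.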

It is directly inspired by the RLHF process that the authors of Intel/neural-chat-7b-v3-1 used to improve performance. I used the same dataset and reformatted it to apply the ChatML template.
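ChatML wraps every turn in `<|im_start|>role` / `<|im_end|>` markers. The sketch below shows one way a preference row could be reformatted into ChatML prompt/chosen/rejected strings. It assumes the source rows carry `system`, `question`, `chosen` and `rejected` fields; the helper name is hypothetical, and the exact strings stored in mlabonne/chatml_dpo_pairs may differ.

```python
def to_chatml_pair(system: str, question: str, chosen: str, rejected: str) -> dict:
    """Hypothetical helper: reformat one preference row into ChatML strings."""
    prompt = ""
    if system:  # the system turn is optional
        prompt += f"<|im_start|>system\n{system}<|im_end|>\n"
    prompt += f"<|im_start|>user\n{question}<|im_end|>\n"
    # The prompt ends with an open assistant turn; each candidate answer closes it.
    prompt += "<|im_start|>assistant\n"
    return {
        "prompt": prompt,
        "chosen": chosen + "<|im_end|>\n",
        "rejected": rejected + "<|im_end|>\n",
    }
```

Keeping the assistant header inside the shared prompt means the chosen and rejected strings differ only in the answer text, which is what the DPO comparison needs.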

The code to train this model is available on Google Colab and GitHub. Training took about an hour on a single A100 GPU.

Quantized models

EXL2:

Results

Update: NeuralHermes-2.5 became the best Hermes-based model on the Open LLM Leaderboard and one of the very best 7B models. 🎉

Teknium (author of OpenHermes-2.5-Mistral-7B) benchmarked the model (see his tweet).

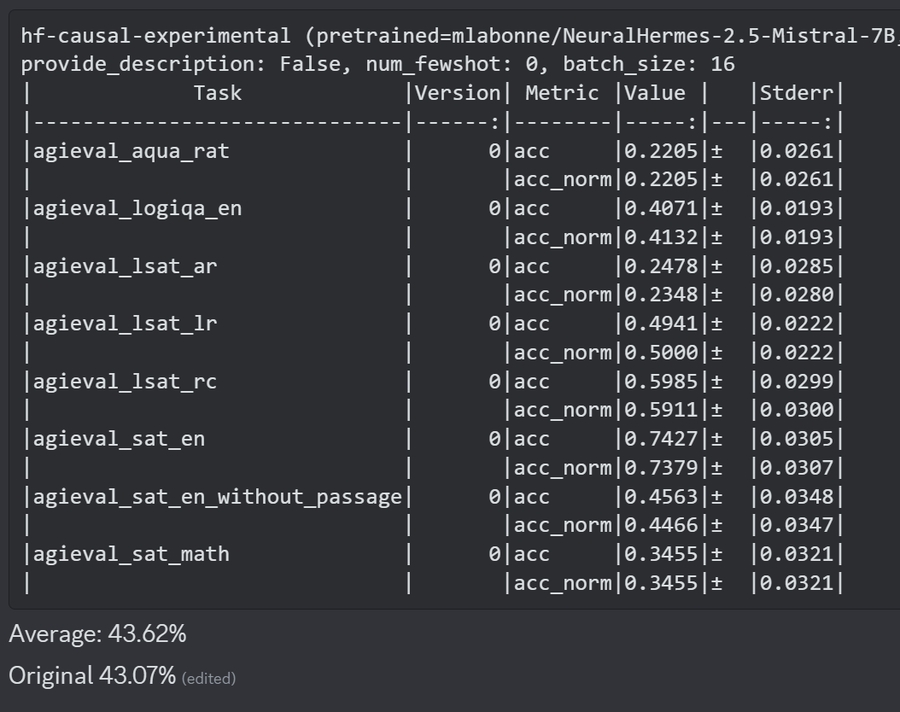

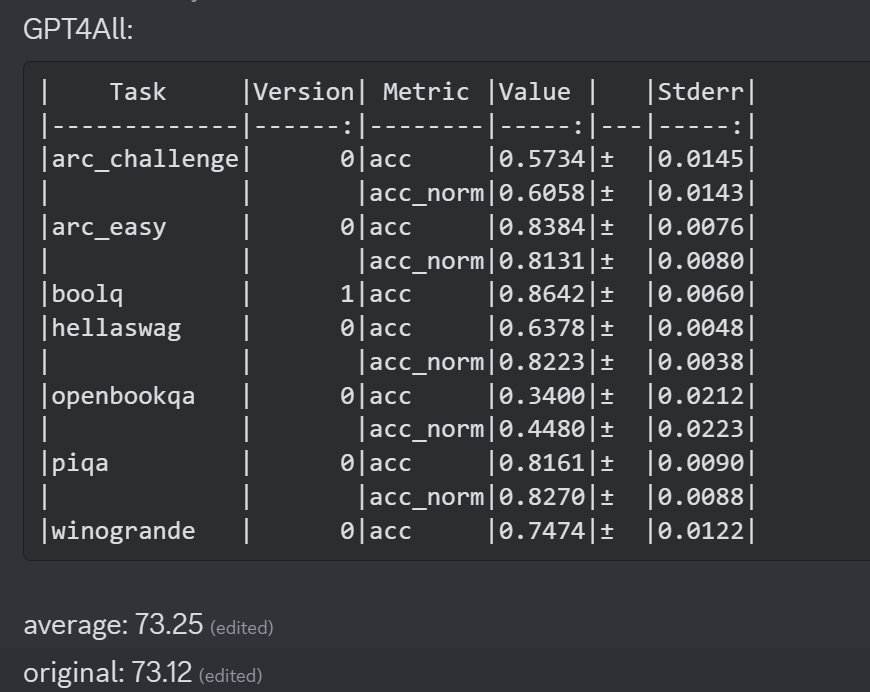

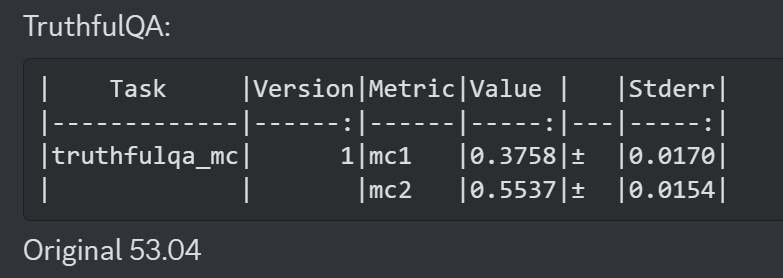

Results improved on every benchmark: AGIEval (from 43.07% to 43.62%), GPT4All (from 73.12% to 73.25%), and TruthfulQA.
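The gains quoted above are small in absolute terms. The snippet below just does the subtraction on the two benchmarks whose scores are given; TruthfulQA is left out because its figures are not stated in the text.

```python
# Scores (%) quoted above: (OpenHermes-2.5-Mistral-7B, NeuralHermes-2.5-Mistral-7B).
scores = {
    "AGIEval": (43.07, 43.62),
    "GPT4All": (73.12, 73.25),
}

def gains(table: dict) -> dict:
    """Absolute improvement in percentage points, rounded to two decimals."""
    return {name: round(new - old, 2) for name, (old, new) in table.items()}

print(gains(scores))  # {'AGIEval': 0.55, 'GPT4All': 0.13}
```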

AGIEval

GPT4All

TruthfulQA

You can check the Weights & Biases project here.

Training hyperparameters

LoRA:

r=16

lora_alpha=16

lora_dropout=0.05

bias="none"

task_type="CAUSAL_LM"

target_modules=['k_proj', 'gate_proj', 'v_proj', 'up_proj', 'q_proj', 'o_proj', 'down_proj']
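With r=16 and all seven attention and MLP projections targeted, the adapter stays small next to the 7B base weights. The sketch below counts LoRA's trainable parameters. The layer dimensions are assumptions taken from the public Mistral-7B-v0.1 configuration, not from this card, and no bias terms are counted because bias="none".

```python
# Assumed Mistral-7B-v0.1 dimensions (public model config, not stated in this card).
HIDDEN = 4096          # hidden_size
INTERMEDIATE = 14336   # intermediate_size of the MLP
LAYERS = 32            # num_hidden_layers
KV_DIM = 8 * 128       # num_key_value_heads * head_dim (grouped-query attention)

# (in_features, out_features) of each linear layer listed in target_modules.
TARGETS = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}

R = 16
LORA_ALPHA = 16
SCALING = LORA_ALPHA / R  # the low-rank update B @ A is multiplied by alpha / r = 1.0

def lora_trainable_params(r: int = R) -> int:
    """Each targeted layer gets A (r x in) and B (out x r): r * (in + out) weights."""
    per_layer = sum(r * (fan_in + fan_out) for fan_in, fan_out in TARGETS.values())
    return per_layer * LAYERS

print(lora_trainable_params())  # 41943040
```

Under these assumptions the adapter has roughly 42M trainable parameters, well under 1% of the base model.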

Training arguments:

per_device_train_batch_size=4

gradient_accumulation_steps=4

gradient_checkpointing=True

learning_rate=5e-5

lr_scheduler_type="cosine"

max_steps=200

optim="paged_adamw_32bit"

warmup_steps=100
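These arguments fix the effective batch size and the learning-rate curve. The sketch below assumes a single GPU (as in the A100 run mentioned above) and the usual linear-warmup-then-cosine shape that a "cosine" scheduler with warmup follows; `lr_at` is an illustrative function, not library code. Note that warmup covers half of the 200 steps, so the learning rate peaks at the midpoint of training.

```python
import math

PER_DEVICE_BATCH = 4
GRAD_ACCUM = 4
MAX_STEPS = 200
WARMUP_STEPS = 100
PEAK_LR = 5e-5

# Gradients from 4 micro-batches of 4 pairs are accumulated before each optimizer step.
EFFECTIVE_BATCH = PER_DEVICE_BATCH * GRAD_ACCUM  # 16 pairs per step on one GPU

def lr_at(step: int) -> float:
    """Linear warmup to PEAK_LR, then half-cosine decay to zero at MAX_STEPS."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    progress = (step - WARMUP_STEPS) / (MAX_STEPS - WARMUP_STEPS)
    return PEAK_LR * 0.5 * (1.0 + math.cos(math.pi * progress))

print(EFFECTIVE_BATCH * MAX_STEPS)  # 3200 preference pairs seen in total
print([lr_at(s) for s in (0, 50, 100, 150, 200)])
```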

DPOTrainer:

beta=0.1

max_prompt_length=1024

max_length=1536
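max_prompt_length and max_length bound the token budget: a prompt keeps at most 1024 tokens and prompt plus response at most 1536, so a response still gets at least 512 tokens after truncation. The helper below is a hypothetical, simplified model of this kind of truncation (keep the end of the prompt, then cut the response); it is not TRL's code, whose exact rules depend on its version and truncation settings.

```python
def truncate_pair(prompt_ids: list, response_ids: list,
                  max_prompt_length: int = 1024, max_length: int = 1536):
    """Hypothetical helper: fit prompt + response into max_length tokens."""
    if len(prompt_ids) + len(response_ids) > max_length:
        # Keep the most recent prompt tokens, which sit closest to the answer.
        prompt_ids = prompt_ids[-max_prompt_length:]
    if len(prompt_ids) + len(response_ids) > max_length:
        # The remaining budget (at least max_length - max_prompt_length) goes to the response.
        response_ids = response_ids[: max_length - len(prompt_ids)]
    return prompt_ids, response_ids
```

For example, a 2000-token prompt with an 800-token response becomes the last 1024 prompt tokens plus the first 512 response tokens.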


You can use this easy-to-use, low-cost LLM API at https://api.market/store/bridgeml/mlabonne
